Hallucinations in Legal Content: Red Flags & Fixes

Hallucinations are confident-sounding fabrications: a case that does not exist, a quotation no court wrote, a pinpoint cite to the wrong page. Legal prompting should make them less likely and easier to detect.

How to reduce hallucinations up front

- For summaries and extractions, tell the model to use only the text you provide.

- Require it to label assumptions and uncertainties.

- Ask for citations only when you supply the source set; otherwise the model may invent authorities to satisfy the request.

- When possible, use retrieval-augmented generation (RAG) or a legal-database workflow so answers are grounded in retrieved documents.
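The first three practices can be folded into a reusable prompt template. The Python sketch below shows one way to do it, assuming you send the model a single text prompt; `build_grounded_prompt`, the source IDs, the `<source>` tags, and the exact rule wording are hypothetical choices for illustration, not any vendor's API.

```python
# Minimal sketch: build_grounded_prompt and the rule wording are hypothetical,
# not part of any particular LLM library or vendor API.


def build_grounded_prompt(task: str, sources: dict[str, str]) -> str:
    """Assemble a prompt that confines the model to the supplied sources."""
    rules = [
        "Use ONLY the source text below. Do not rely on outside knowledge.",
        "Mark each assumption with 'ASSUMPTION:' and each uncertainty with 'UNCERTAIN:'.",
        "Cite only the sources below, by ID. If none supports a point, say so.",
    ]
    # Wrap each source in an ID-tagged delimiter so citations can be traced later.
    source_blocks = "\n\n".join(
        f'<source id="{sid}">\n{text}\n</source>' for sid, text in sources.items()
    )
    parts = [
        f"Task: {task}",
        "Rules:\n" + "\n".join(f"- {rule}" for rule in rules),
        "Sources:\n" + source_blocks,
    ]
    return "\n\n".join(parts)


# Made-up source text, for illustration only.
prompt = build_grounded_prompt(
    "Summarize the termination provisions.",
    {"S1": "Either party may terminate this Agreement on 30 days' written notice."},
)
print(prompt)
```

Tagging each source with an ID means any citation in the answer can later be matched back to text you actually supplied.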

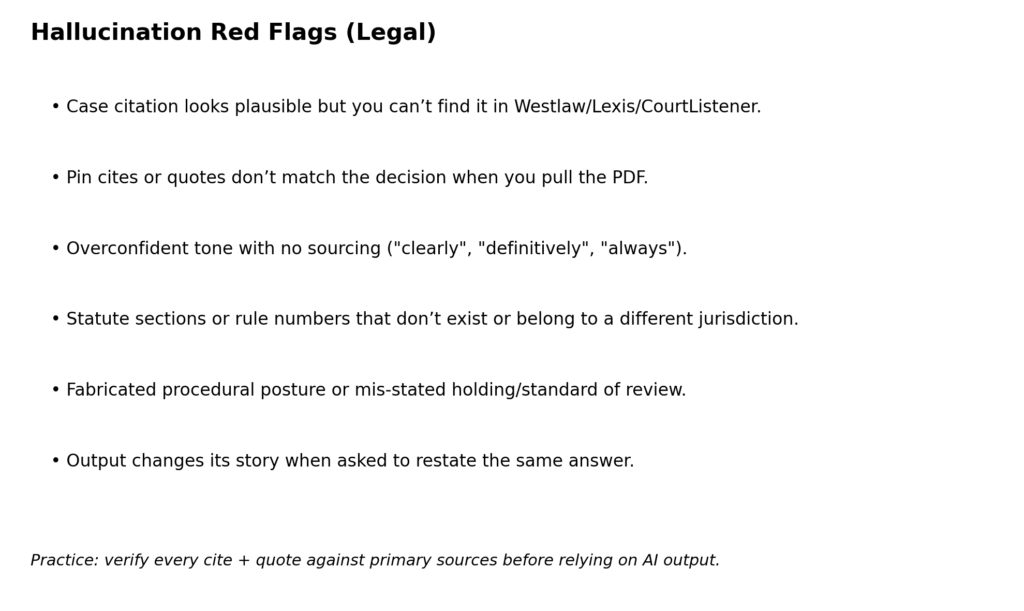

How to detect hallucinations

Use the red flags chart above, and always spot-check the output against primary sources. If you cannot find a cited case, treat it as fabricated until you can verify it.
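To make the spot-check systematic, you can first pull every reporter citation out of a draft so none is skipped. The Python sketch below is a rough illustration, assuming U.S. federal case citations in the common "volume reporter page" form; `CITATION_RE` and `extract_citations` are hypothetical names, and the pattern will miss state reporters, statutes, neutral citations, and many other formats. The case names and citations in the sample draft are made up.

```python
import re

# Hypothetical pattern covering a few U.S. federal reporters only.
CITATION_RE = re.compile(
    r"\b(\d{1,4})\s+"                                # volume
    r"(U\.S\.|S\.\s?Ct\.|L\.\s?Ed\.(?:\s?2d)?"       # Supreme Court reporters
    r"|F\.(?:\s?Supp\.)?(?:\s?(?:2d|3d|4th))?)"      # Federal Reporter / Federal Supplement
    r"\s+(\d{1,5})\b"                                # first page
)


def extract_citations(text: str) -> list[str]:
    """Return every matching reporter citation in text, in order of appearance."""
    return [m.group(0) for m in CITATION_RE.finditer(text)]


# Made-up draft text; neither citation is real.
draft = (
    "See Doe v. Roe, 999 F.3d 123 (9th Cir. 2021); "
    "Roe v. Poe, 12 F. Supp. 3d 45 (D. Mass. 2014)."
)
for cite in extract_citations(draft):
    print(f"[ ] {cite}: confirm it exists and says what the draft claims")
```

Each printed line becomes a checklist item for a lookup in a primary source or legal database; a citation that matches the pattern is not thereby verified.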

A “verify-first” prompt pattern

List every citation and quotation you used. For each, give the source name, the pinpoint cite, and a short supporting quote. If you are unsure of any item, write "UNCERTAIN" instead of guessing.
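The verify-first pattern is easiest to audit when the model's answer is machine-readable. The Python sketch below assumes you additionally ask for the list as a JSON array whose objects use the keys `source`, `pinpoint`, and `quote` (hypothetical field names), and that each source in your set has a short ID. `normalize` and `check_citations` are illustrative names, not a library API. The sketch flags entries marked UNCERTAIN, sources outside the set you supplied, and quotes that do not appear verbatim in the cited source. It does not check pinpoint cites or confirm that an authority exists, so a primary-source check is still needed.

```python
import json
import re


def normalize(text: str) -> str:
    """Straighten curly quotes and collapse whitespace before comparing text."""
    for curly, straight in (("\u201c", '"'), ("\u201d", '"'), ("\u2018", "'"), ("\u2019", "'")):
        text = text.replace(curly, straight)
    return re.sub(r"\s+", " ", text).strip()


def check_citations(model_output: str, sources: dict[str, str]) -> list[str]:
    """Flag UNCERTAIN entries, unknown sources, and quotes not found verbatim.

    Assumes model_output is a JSON array of objects with the hypothetical keys
    "source", "pinpoint", and "quote". Pinpoint cites are NOT checked here.
    """
    problems = []
    for i, entry in enumerate(json.loads(model_output), start=1):
        source, quote = entry.get("source", ""), entry.get("quote", "")
        if any("UNCERTAIN" in str(value) for value in entry.values()):
            problems.append(f"#{i}: marked UNCERTAIN; verify manually")
        elif source not in sources:
            problems.append(f"#{i}: {source!r} is not in the source set; treat as fabricated")
        elif not quote or normalize(quote) not in normalize(sources[source]):
            problems.append(f"#{i}: quote missing or not found verbatim in {source!r}")
    return problems


# Made-up source text and model output, for illustration only.
sources = {"S1": "Termination requires that notice must be given in writing at least 30 days in advance."}
output = json.dumps([
    {"source": "S1", "pinpoint": "at 1", "quote": "notice must be given in writing"},
    {"source": "S9", "pinpoint": "at 3", "quote": "oral notice suffices"},
    {"source": "UNCERTAIN", "pinpoint": "UNCERTAIN", "quote": "UNCERTAIN"},
])
problems = check_citations(output, sources)
for problem in problems:
    print(problem)
```

Here the first entry passes, the second is flagged because `S9` was never supplied, and the third is flagged for manual review. Exact-match checking is deliberately strict: a paraphrase presented as a quotation is itself a red flag.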