What LLMs Are (and Aren’t): A Lawyer‑Friendly Mental Model

Large Language Models (LLMs) generate text by repeatedly predicting the most likely next token (a word or a piece of a word). They can sound confident even when they’re wrong, and understanding why is key to using them safely.

A useful mental model

Think of an LLM as a pattern engine: it is trained on large amounts of text and learns statistical relationships between words and phrases. It does not “know” the law the way a lawyer does; it generates plausible text based on patterns.
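For readers curious what "learning statistical relationships" looks like mechanically, here is a deliberately tiny toy in Python. It is an assumption-laden sketch, not how any real LLM is built: it counts which word follows which in a few made-up sentences (the corpus and the function names `train_bigrams`, `predict_next`, and `generate` are hypothetical), then "writes" by always picking the most frequent next word.

```python
from collections import Counter, defaultdict
from typing import Optional

# Hypothetical toy "training text", for illustration only.
corpus = (
    "the court held that the contract was void . "
    "the court held that the claim was barred . "
    "the court found that the contract was valid ."
)

def train_bigrams(text: str) -> dict:
    """Count how often each word is immediately followed by each other word."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(model: dict, word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if never seen."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(model: dict, start: str, max_words: int = 8) -> str:
    """Greedily chain predictions: always take the single most likely next word."""
    words = [start]
    for _ in range(max_words):
        nxt = predict_next(model, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

model = train_bigrams(corpus)
print(predict_next(model, "court"))  # → held
print(generate(model, "the"))        # → the court held that the court held that the
```

The output reads like legal prose but goes in circles: the toy has no idea what any court held, only which words tend to follow which. Real LLMs use vastly more data and context, but the underlying move, predicting plausible continuations, is the same idea the mental model above describes.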

Why LLMs can hallucinate

Because the model is predicting text, it may generate details that fit the pattern but are not true, such as non-existent cases, wrong dates, or made-up quotations. This is why citation and fact validation must be baked into your workflow.
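One small, mechanical piece of that validation can be sketched in Python: pull case names out of a draft and flag any that a human has not already confirmed against a primary source. This is a minimal sketch under stated assumptions: the `VERIFIED` set, the case names, and the `unverified_cases` helper are all hypothetical, and the "Party v. Party" pattern is rough (for example, a capitalized word such as "See" directly before a case name would be swept into the match).

```python
import re

# Hypothetical list of case names a person has already checked against a
# primary source (reporter, docket, or research database).
VERIFIED = {"Alpha Corp. v. Beta LLC"}

# Rough "Party v. Party" pattern: capitalized words on each side of " v. ".
# Real citation formats vary far more; this only illustrates the idea.
CASE_NAME = re.compile(r"\b[A-Z][\w.]*(?: [A-Z][\w.]*)* v\. [A-Z][\w.]*(?: [A-Z][\w.]*)*")

def unverified_cases(draft: str) -> list:
    """Return case names found in `draft` that are not in VERIFIED."""
    return [name for name in CASE_NAME.findall(draft) if name not in VERIFIED]

draft = (
    "The clause is enforceable under Alpha Corp. v. Beta LLC, "
    "and Gamma Inc. v. Delta Co. applies the same rule."
)
print(unverified_cases(draft))  # → ['Gamma Inc. v. Delta Co.']
```

A check like this only tells you which names still need a human look; it cannot confirm that a real case exists or says what the draft claims. That part still requires reading the primary source.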

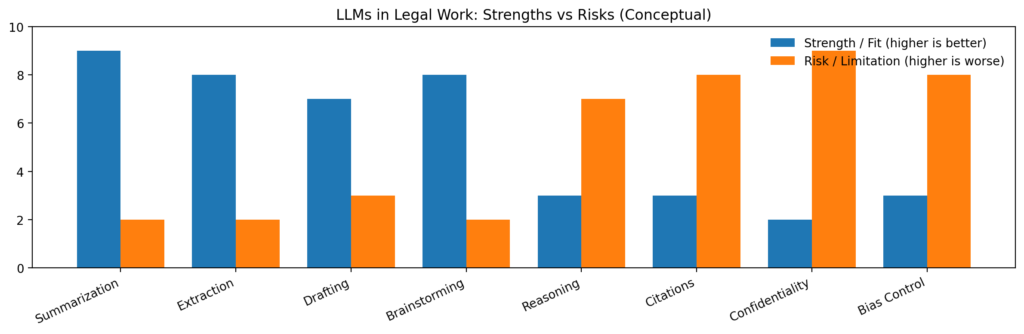

What LLMs are best for in law

- Summarizing long documents and extracting key facts

- Generating first drafts (emails, memos, outlines)

- Brainstorming issue lists and counterarguments

- Rewriting for clarity, tone, and structure

What LLMs are NOT reliable for (without guardrails)

- Providing final legal conclusions without primary-source validation

- Producing accurate citations from memory, without a verified source to draw on

- Handling privileged or confidential client information in public tools

Try it

Prompt:

You are a legal assistant. In 5 bullets, explain what an LLM is to a busy litigation partner. Include (1) one strength, (2) one limitation, and (3) one safety rule for using it in legal work.

Then refine: ask the model to rewrite the answer for (a) a client and (b) a new associate. Compare the tone of each version and how it describes the risks.